The EBCDIC File Viewer translates files from the IBM EBCDIC encoding to ASCII. It is useful when you have to process plain data from the host that contains no line breaks. When you open a file, it automatically tries to determine the fixed record length for files without line breaks. The viewer also checks whether your file is EBCDIC or ASCII encoded. This link provides a view of the COBOL source code and copy file that perform a file format and record content conversion from an ASCII/text file to an EBCDIC-encoded indexed file. In summary, it is an example of how a COBOL program can read an ASCII/text file and write an EBCDIC indexed file.
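The two checks a viewer of this kind performs (guessing the encoding and cutting unbroken data into fixed-length records) can be sketched in a few lines of Python. This is only an illustration under stated assumptions, not the viewer's actual algorithm: the function names `looks_like_ebcdic` and `split_records` are hypothetical, the blank-counting heuristic is a simplification, and cp037 is assumed as the EBCDIC code page.

```python
def looks_like_ebcdic(data: bytes) -> bool:
    """Rough heuristic (an assumption): EBCDIC text uses 0x40 for blanks,
    ASCII text uses 0x20, so compare how often each byte occurs."""
    return data.count(0x40) > data.count(0x20)


def split_records(data: bytes, width: int, codec: str = "cp037"):
    """Cut data without line breaks into fixed-width records and decode them."""
    return [data[i:i + width].decode(codec) for i in range(0, len(data), width)]


# Two 10-byte records without any line break, encoded with cp037 (assumed).
raw = ("ALICE     " + "BOB       ").encode("cp037")

if looks_like_ebcdic(raw):
    records = split_records(raw, 10)
else:
    records = []

for record in records:
    print(repr(record))
```

A real tool would need a more robust detection step, and the record width here is given rather than discovered.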

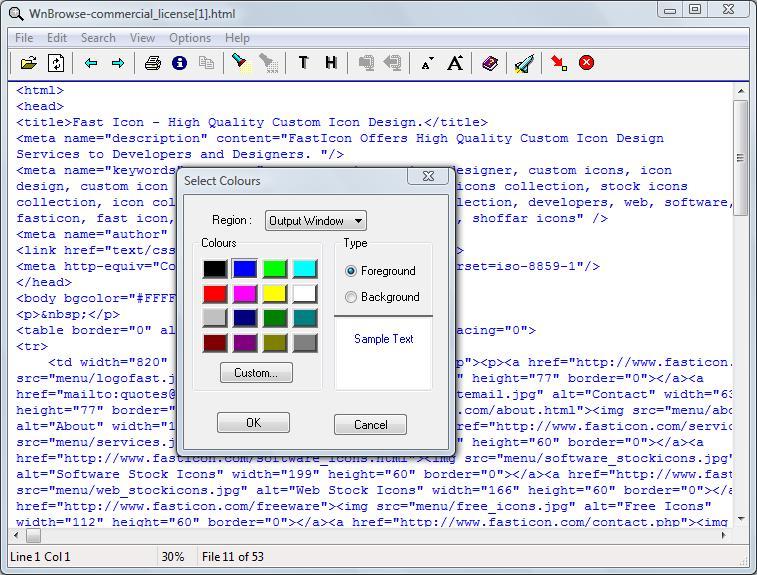

Other tools cover related ground: editors that handle text, EBCDIC, data, binary and hex files, and converters such as Vedit that translate text files from one encoding to another.

Viewing the contents of XMIT and AWS files: you can view a file by double-clicking on it in the AWS/XMIT file list. You can also extract any of the files just as you would extract files from a ZIP archive. The screenshot below shows V displaying an EBCDIC file contained in an XMI archive.

This command-line utility is a code page converter used to change the character encoding of text. It fully supports charsets such as ANSI code pages, UTF-8, UTF-16 LE/BE, UTF-32 LE/BE, and EBCDIC. It is designed to convert big text files, too. It runs on Windows XP onwards (tested on XP, Windows 7, Windows 8.1, and Windows 10).


Additional EBCDIC codecs

Project description

ebcdic is a Python package adding additional EBCDIC codecs for data exchange with legacy systems. It works with Python 2.7 and Python 3.4+.

EBCDIC is short for Extended Binary Coded Decimal Interchange Code and is a family of character encodings that is mainly used on mainframe computers. There is no real point in using it unless you have to exchange data with legacy systems that still only support EBCDIC as character encoding.

Installation

The ebcdic package is available from https://pypi.python.org/pypi/ebcdic and can be installed using pip:

Example usage

To encode 'hello world' on EBCDIC systems in German speaking countries, use:
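A minimal sketch of that call, using cp1141 from the codec list below. It assumes the ebcdic package is installed. The fallback to cp273 from the standard library is an addition for illustration only, so the snippet still runs without the package; for this text both codecs produce the same bytes, because they differ only in the Euro sign.

```python
try:
    import ebcdic  # importing the package registers its codecs, e.g. cp1141
    german_codec = "cp1141"
except ImportError:
    # Assumption for illustration: fall back to the standard library codec.
    german_codec = "cp273"

encoded = "hello world".encode(german_codec)
decoded = encoded.decode(german_codec)

print(encoded)  # b'\x88\x85\x93\x93\x96@\xa6\x96\x99\x93\x84'
print(decoded)  # hello world
```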

Supported codecs

The ebcdic package includes EBCDIC codecs for the following regions:

- cp290 - Japan (Katakana)

- cp420 - Arabic bilingual

- cp424 - Israel (Hebrew)

- cp833 - Korea Extended (single byte)

- cp838 - Thailand

- cp870 - Eastern Europe (Poland, Hungary, Czech, Slovakia, Slovenia, Croatian, Serbia, Bulgarian); represents Latin-2

- cp1097 - Iran (Farsi)

- cp1140 - Australia, Brazil, Canada, New Zealand, Portugal, South Africa, USA

- cp1141 - Austria, Germany, Switzerland

- cp1142 - Denmark, Norway

- cp1143 - Finland, Sweden

- cp1144 - Italy

- cp1145 - Latin America, Spain

- cp1146 - Great Britain, Ireland, North Ireland

- cp1147 - France

- cp1148 - International

- cp1148ms - International, Microsoft interpretation; similar to cp1148 except that 0x15 is mapped to 0x85 (“next line”) instead of 0x0a (“linefeed”)

- cp1149 - Iceland

It also includes legacy codecs:

- cp037 - Australia, Brazil, Canada, New Zealand, Portugal, South Africa; similar to cp1140 but without Euro sign

- cp273 - Austria, Germany, Switzerland; similar to cp1141 but without Euro sign

- cp277 - Denmark, Norway; similar to cp1142 but without Euro sign

- cp278 - Finland, Sweden; similar to cp1143 but without Euro sign

- cp280 - Italy; similar to cp1144 but without Euro sign

- cp284 - Latin America, Spain; similar to cp1145 but without Euro sign

- cp285 - Great Britain, Ireland, North Ireland; similar to cp1146 but without Euro sign

- cp297 - France; similar to cp1147 but without Euro sign

- cp500 - International; similar to cp1148 but without Euro sign

- cp500ms - International, Microsoft interpretation; identical to codecs.cp500; similar to ebcdic.cp500 except that 0x15 is mapped to 0x85 (“next line”) instead of 0x0a (“linefeed”)

- cp871 - Iceland; similar to cp1149 but without Euro sign

- cp875 - Greece; similar to cp9067 but without Euro sign and a few other characters

- cp1025 - Cyrillic

- cp1047 - Open Systems (MVS C compiler)

- cp1112 - Estonia, Latvia, Lithuania (Baltic)

- cp1122 - Estonia; similar to cp1157 but without Euro sign

- cp1123 - Ukraine; similar to cp1158 but without Euro sign
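The difference between the IBM and Microsoft interpretations mentioned for cp500ms can be observed with the standard library alone. The sketch below decodes byte 0x15 with the cp500 codec that ships with Python; the variable names are illustrative.

```python
# Byte 0x15 is the EBCDIC "new line" control character.
nel_byte = b"\x15"

# The cp500 codec from the Python standard library follows the
# Microsoft interpretation and maps 0x15 to U+0085 ("next line").
decoded = nel_byte.decode("cp500")
print(hex(ord(decoded)))

# The EBCDIC line feed byte 0x25 maps to U+000A in the same codec.
line_feed = b"\x25".decode("cp500")
print(hex(ord(line_feed)))
```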

Codecs in the standard library overrule some of these codecs. At the time of this writing this concerns cp037, cp273 (since 3.4), cp500 and cp1140.

To get a list of EBCDIC codecs that are already provided by different sources, use ebcdic.ignored_codec_names(). For example, with Python 3.6 the result is:
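A hedged sketch of how this can be checked. The first part uses only the standard library to probe a hand-picked candidate list (an assumption for illustration) before ebcdic is imported. The second part calls ebcdic.ignored_codec_names() as named above, but only if the package is installed. No output is shown because it depends on the Python version.

```python
import codecs


def is_known_codec(name):
    """Return True if Python can currently resolve the codec name."""
    try:
        codecs.lookup(name)
    except LookupError:
        return False
    return True


# Probe before importing ebcdic, so only codecs from other sources are found.
candidates = ["cp037", "cp273", "cp500", "cp1140", "cp1141"]
already_provided = [name for name in candidates if is_known_codec(name)]
print(already_provided)

try:
    import ebcdic
    # Function named in the text above; sorted() accepts any iterable result.
    print(sorted(ebcdic.ignored_codec_names()))
except ImportError:
    print("ebcdic package not installed; skipping ignored_codec_names()")
```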

Unsupported codecs

According to a comprehensive list of code pages, there are additional codecs this package does not support yet. Possible reasons and solutions are:


- It’s a double byte codec, e.g. cp834 (Korea). Technically CodecMapper can easily support them by increasing the mapping size from 256 to 65536. Due to a lack of test data and access to Asian mainframes this was deemed too experimental for now.

- The codec contains combining characters, e.g. cp1132 (Lao), which allows it to represent more than 256 characters by combining several characters.

- Java does not include a mapping for the respective code page, e.g. cp410/880 (Cyrillic). You can add such a codec based on the information found at the link above and submit an enhancement request for the Java standard library. Once it is released, simply add the new codec to the build.xml as described below.

- I missed a codec. Simply open an issue on Github at https://github.com/roskakori/CodecMapper/issues and it will be added with the next version.

Source code

These codecs have been generated using CodecMapper, available from https://github.com/roskakori/CodecMapper. Read the README in order to build the ebcdic package from source.

To add another 8 bit EBCDIC codec just extend the ant target ebcdic in build.xml using a line like:

Replace XXX by the number of the 8 bit code page you want to include.

Then run:

to build and test the distribution.

The ebcdic/setup.py automatically includes the new encoding in the package and ebcdic/__init__.py registers it during import ebcdic, so no further steps are needed.

Changes

Version 1.1.1, 2019-08-09

Moved license information from README to LICENSE (#5). This required the distribution to change from sdist to wheel because apparently it is a major challenge to include a text file in a platform independent way (#11).

Sadly this breaks compatibility with Python 2.6, 3.1, 3.2 and 3.3. If you still need ebcdic with one of these Python versions, use ebcdic-1.0.0.

This took several attempts and intermediate releases that were broken in different ways on different platforms. To prevent people from accidentally installing one of these broken releases they have been removed from PyPI. If you still want to take a look at them, use the respective tags.

Version 1.0.0, 2019-06-06

- Changed development status to “Production/Stable”.

- Added international code pages cp500ms and cp1148ms, which are the Microsoft interpretations of the respective IBM code pages. The only difference is that 0x15 is mapped to 0x85 (“next line”) instead of 0x0a (“new line”). Note that codecs.cp500 included with the Python standard library also uses the Microsoft interpretation (#4).

- Added Arabian bilingual code page 420.

- Added Baltic code page 1112.

- Added Cyrillic code page 1025.

- Added Eastern Europe code page 870.

- Added Estonian code pages 1122 and 1157.

- Added Greek code page 875.

- Added Farsi Bilingual code page 1097.

- Added Hebrew code page 424 and 803.

- Added Korean code page 833.

- Added Meahreb/French code page 425.

- Added Japanese (Katakana) code page 290.

- Added Thailand code page 838.

- Added Turkish code page 322.

- Added Ukraine code page 1123.

- Added Python 3.5 to 3.8 as supported version.

- Improved PEP8 conformance of generated codecs.

Version 0.7, 2014-11-17

- Clarified which codecs are already part of the standard library and that these codecs overrule the ebcdic package. Also added a function ebcdic.ignored_codec_names() that returns the names of the EBCDIC codecs provided by other means. To obtain access to ebcdic codecs overruled by the standard library, use ebcdic.lookup().

- Cleaned up (PEP8, __all__, typos, …).

Version 0.6, 2014-11-15

- Added support for Python 2.6+ and 3.1+ (#1).

- Included a modified version of gencodec.py that still builds maps instead of tables so the generated codecs work with Python versions earlier than 3.3. It also does a from __future__ import unicode_literals so the codecs even work with Python 2.6+ using the same source code. As a side effect, this simplifies building the codecs because it removes the need for a local copy of the cpython source code.

Version 0.5, 2014-11-13

- Initial public release

Release history

1.1.1

1.0.0

0.7.0

0.6.0

0.5.0

Download files


| Filename, size | File type | Python version |
|---|---|---|
| ebcdic-1.1.1-py2.py3-none-any.whl (128.5 kB) | Wheel | 3.6 |

Hashes for ebcdic-1.1.1-py2.py3-none-any.whl

| Algorithm | Hash digest |
|---|---|
| SHA256 | 33b4cb729bc2d0bf46cc1847b0e5946897cb8d3f53520c5b9aa5fa98d7e735f1 |
| MD5 | 68ae042f2c36ab2a4f9c664a657a6478 |
| BLAKE2-256 | 0d2f633031205333bee5f9f93761af8268746aa75f38754823aabb8570eb245b |


In the first article in this series, we introduced EBCDIC code pages, and how they work with z/OS. But z/OS became more complicated when UNIX Systems Services (USS) introduced ASCII into the mix. Web-enablement and XML add UTF-8 and other Unicode specifications as well. So how do EBCDIC, ASCII and Unicode work together?

If you are a mainframe veteran like me, you work in EBCDIC. You use a TN3270 emulator to work with TSO/E and ISPF, you use datasets, and every program is EBCDIC. Or at least that's the way it used to be.

UNIX Systems Services (USS) has changed all that. Let's take a simple example. Provided it has been set up by the Systems Programmer, you can now use any Telnet client such as PuTTY to access z/OS using telnet, SSH or rlogin. From here, you get a standard UNIX shell that will feel like home to anyone who has used UNIX on any platform. telnet, SSH and rlogin are ASCII sessions. So z/OS (or more specifically, TCP/IP) will convert everything going to or from that telnet client between ASCII and EBCDIC.

Like EBCDIC, extended ASCII has different code pages for different languages and regions, though not nearly as many. Most English speakers will use ISO-8859-1. If you're from Norway, you may prefer ISO-8859-10 (the Nordic set), and Russians will probably go for ISO-8859-5. In UNIX, the ASCII character set you use is part of the locale, which also includes the preferred currency symbol and date formats. The locale is set using the setenv command to update the LANG or LC_* environment variables. You then set the language on your Telnet client to match, and you're away. Here is how it's done on PuTTY.

From the USS shell on z/OS, this is exactly the same (it is a POSIX compliant shell after all). So to change the locale to France, we use the USS setenv command to set LANG to a French locale name. In such a name, the first two characters are the language code specified in the ISO 639-1 standard and the second two are the country code from ISO 3166-1.
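To make the structure of a locale name concrete, here is a small sketch that splits one into its parts. The function name `split_locale` and the sample value `fr_FR.ISO8859-1` are assumptions chosen for illustration; check the locales installed on your system for the exact names available.

```python
def split_locale(name):
    """Split a POSIX-style locale name into (language, country, charset).

    The charset part is optional and returned as None when absent.
    """
    base, _, charset = name.partition(".")
    language, _, country = base.partition("_")
    return language, country, charset or None


# Hypothetical locale name for France with an ISO 8859-1 character set.
print(split_locale("fr_FR.ISO8859-1"))  # ('fr', 'FR', 'ISO8859-1')
print(split_locale("fr_FR"))            # ('fr', 'FR', None)
```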

Converting to and from ASCII on z/OS consumes resources. If you're only working with ASCII data, it would be a good idea to store the data in ASCII, and avoid the overhead of always converting between EBCDIC and ASCII. The good news is that this is no problem. ASCII is also a Single Byte Character Set (SBCS), so all the z/OS instructions and functions work just as well for ASCII as they do for EBCDIC. Database managers generally just store bytes. So if you don't need them to display the characters in a readable form on a screen, you can easily store ASCII in z/OS datasets, USS files and z/OS databases.

The problem is displaying that information. Using ISPF to edit a dataset with ASCII data will show gobbledygook: unreadable characters. ISPF browse has similar problems.
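The effect is easy to reproduce off the mainframe. The sketch below stores a string as ISO8859-1 bytes and then decodes the same bytes as EBCDIC, which is roughly what an EBCDIC-only viewer does. It uses cp037 from the Python standard library as a stand-in for the z/OS code page; that choice is an assumption made so the example runs without extra packages.

```python
text = "Hello, z/OS"

# Store the text as ASCII-compatible ISO8859-1 bytes.
stored = text.encode("iso8859-1")

# Interpreting the same bytes as EBCDIC yields unrelated characters.
misread = stored.decode("cp037")

# Decoding with the encoding that was actually used recovers the text.
correct = stored.decode("iso8859-1")

print(repr(misread))
print(repr(correct))
```

The bytes themselves never change; only the table used to interpret them does.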

With traditional z/OS datasets, there's nothing you can do. However, z/OS USS files have a tag that can specify the character set that is used. For example, look at the ls listing of the USS file here:

You can see that the code page used is IBM-1047 - the default EBCDIC. The t to the left of the output indicates that the file holds text, and T=on indicates that the file holds uniformly encoded text data. However here is another file:

The character set is ISO8859-1: extended ASCII for English speakers. This tag can be set using the USS chtag command. It can also be set when mounting a USS file system, setting the default tag for all files in that file system.

This tag can be used to determine how the file will be viewed. From z/OS 1.9, this means that when ISPF edit or browse is used to access a USS file, ASCII characters will automatically be displayed if this tag is set to CCSID 819 (ISO8859-1). The TSO/E OBROWSE and OEDIT commands, ISPF option 3.17, and the ISHELL interface all use ISPF edit and browse.

If you store ASCII in traditional z/OS datasets, ISPF BROWSE and EDIT will not automatically convert from ASCII. Nor will they convert any character set other than ISO8859-1. However, you can still view the ASCII data using the SOURCE ASCII command. Here is an example of how this command works.

Some database systems also store the code page. For example, have a look at the following output from the SAS PROC CONTENTS procedure. This shows the definitions of a SAS table. You can see that it is encoded in EBCDIC 1142 (Denmark/Norway):

DB2 also plays this kind of ball. Every DB2 table can have an ENCODING_SCHEME value assigned in SYSIBM.SYSTABLES which overrides the default CCSID. This value can also be overridden in the SQL Descriptor Area for SQL statements, or in the stored procedure definition for stored procedures. You can also specify the CCSID when binding an application, or in the DB2 DECLARE or CAST statements.

Or in other words, if you define tables and applications correctly, DB2 will do all the translation for you.

Unicode has one big advantage over EBCDIC and ASCII: there are no code pages. Every character is represented in the same table. And the Standards people have made sure that Unicode has enough room for a lot more characters - even the Star Trek Klingon language characters get a mention.

But of course this would be too simple. There are actually a few different Unicodes out there:

- UTF-8: Multi-byte character set in which a character takes one to four bytes. The basic ASCII characters (such as a-z, A-Z, 0-9) are encoded as the same single bytes as in ASCII.

- UTF-16: Multi-byte character set, though most characters are two bytes.

- UTF-32: Each character is four bytes.
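The size differences between the three encodings can be checked directly. The sketch below counts the bytes needed for a few sample characters; the helper name `encoded_lengths` and the choice of samples are illustrative. The little-endian variants are used so that no byte order mark is added to the count.

```python
def encoded_lengths(character):
    """Return the number of bytes the character needs in each encoding."""
    encodings = ("utf-8", "utf-16-le", "utf-32-le")
    return {name: len(character.encode(name)) for name in encodings}


samples = [
    "A",           # basic ASCII letter
    "\u00e9",      # e with acute accent
    "\u20ac",      # Euro sign
    "\U0001F600",  # emoji outside the Basic Multilingual Plane
]

for character in samples:
    print(repr(character), encoded_lengths(character))
```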


Most high level languages have some sort of Unicode support, including C, COBOL and PL/1. However you need to tell these programs that you're using Unicode in compiler options or string manipulation options.


z/OS also has instructions for converting between Unicode, UTF-8, UTF-16 and UTF-32. By Unicode, IBM means Unicode Basic Latin: the first 255 characters of Unicode - which fit into one byte.

A problem with anything using Unicode on z/OS is that it can be expensive in terms of CPU use. To help out, IBM has introduced some new Assembler instructions oriented towards Unicode. Many of the latest high level language compilers use these when working with Unicode instructions - making these programs much faster. If you have a program that uses Unicode and hasn't been recompiled for a few years, consider recompiling it. You may see some performance improvements.

z/OS is still EBCDIC and always will be. However IBM has realised that z/OS needs to talk to the outside world, and so other character encoding schemes like ASCII and Unicode need to be supported - and are.

z/OS USS files have an attribute to tell you the encoding scheme, and some database systems like SAS and DB2 also give you an attribute to set. Otherwise, you need to know yourself how the strings are encoded.


This second article has covered how EBCDIC, ASCII and Unicode work together with z/OS. In the final article in this series of three, I'll look at converting between them.